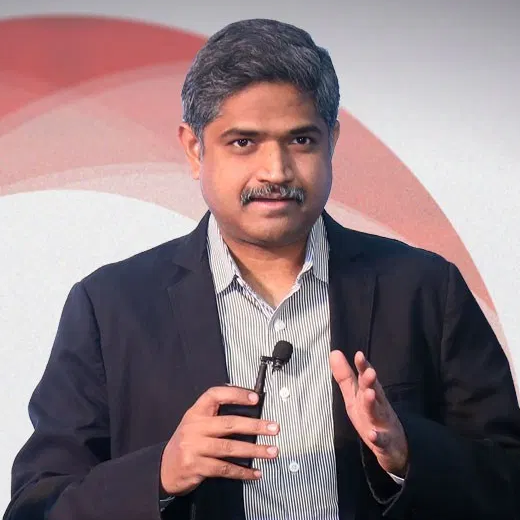

Abdul Majed Raja RS

Senior Analyst, Atlassian

Abdul Majed Raja is an analytics consultant who helps organizations make data-driven business decisions. For much of his career, he has worked in analytics at companies ranging from public firms to start-ups. He's a passionate trainer of data science with R and Python and a community builder who leads the Bengaluru R User Group. He's also a published writer on tech sites and a strong FOSS supporter and contributor.

Session: Interpretable Machine Learning with Python Examples

Machine learning systems are becoming ubiquitous in our daily lives. With the rise of black-box models, ML solutions built on them are often criticized for their lack of transparency, and their trustworthiness and fairness are being questioned. In some countries, the law requires the organization that owns an ML model to explain its predictions. It is therefore high time for machine learning engineers and data scientists to practice interpretable ML, which is also referred to as explainable AI (XAI).

This talk helps you understand the following:

- What is Interpretable ML / Explainable AI?
- Why is interpretability required in machine learning?
- How is it relevant to me?
- Types of interpretability (global and local)
- IML with Python
- References (LIME, SHAP)